Understanding the Critical Role of Data Dependencies in Modern Organizations

Most organizations now depend on interconnected systems in which data flows through multiple pipelines, transformations, and applications. Internal data dependencies are the relationships between datasets, tables, views, and processes within an organization’s data ecosystem; for example, a reporting view depends on every table it reads from. Understanding and monitoring these dependencies is essential for maintaining data quality, ensuring compliance, and preventing costly system failures.

The rapid growth of data volumes and the increasing complexity of data architectures have made manual tracking of dependencies impractical. Because a single change in an upstream system can cascade through dozens of downstream processes, organizations need automated monitoring tools to maintain visibility into and control over their data infrastructure.

The Business Impact of Unmonitored Data Dependencies

Organizations that fail to monitor their internal data dependencies face risks to both operational efficiency and strategic decision-making. Data pipeline failures often occur without warning and leave behind incomplete or corrupted datasets, which in turn produce misleading business intelligence reports and analytics.

Consider a marketing team that discovers its customer segmentation model has been using outdated demographic data for three months because an upstream transformation failed without anyone noticing. The cost of such an incident extends beyond immediate operational expenses to lost opportunities, regulatory compliance issues, and damaged stakeholder confidence.
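A freshness check is one simple way to catch this kind of silent failure. The Python sketch below compares each table's last successful load against a maximum allowed age. It assumes your pipeline records a timezone-aware load timestamp per table; the table names, dates, and one-day threshold are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class TableStatus:
    name: str
    last_updated: datetime  # timezone-aware time of the last successful load
    max_age: timedelta      # freshness threshold agreed with consumers (hypothetical)


def stale_tables(statuses, now=None):
    """Return the names of tables whose last successful load is older than max_age."""
    if now is None:
        now = datetime.now(timezone.utc)
    return [s.name for s in statuses if now - s.last_updated > s.max_age]


if __name__ == "__main__":
    now = datetime(2024, 6, 1, tzinfo=timezone.utc)
    statuses = [
        # Upstream transformation silently stopped about three months ago.
        TableStatus("crm.demographics", now - timedelta(days=90), timedelta(days=1)),
        TableStatus("marketing.segments", now - timedelta(hours=2), timedelta(days=1)),
    ]
    print(stale_tables(statuses, now))  # ['crm.demographics']
```

Note that the segmentation table itself looks fresh here; only a check on its upstream source reveals the problem, which is why freshness checks are most useful when combined with lineage.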

Common Challenges in Data Dependency Management

- Lack of visibility into data lineage across complex multi-system environments

- Difficulty identifying the root cause of data quality issues

- Time-consuming manual processes for impact analysis

- Inadequate documentation of data transformation logic

- Limited understanding of downstream effects when making system changes

Essential Features of Effective Data Dependency Monitoring Tools

Modern data dependency monitoring tools need a broad set of capabilities to cope with the many moving parts of enterprise data ecosystems. Automated discovery is arguably the most critical, because manually documented dependencies quickly become outdated in dynamic environments.
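As a rough illustration of automated discovery, the Python sketch below extracts table-level dependencies from a single SQL statement using regular expressions. It is a deliberately naive stand-in: it treats any identifier after FROM or JOIN as a source, so CTE names and expressions such as EXTRACT(YEAR FROM col) can yield false positives. A production tool would use a real SQL parser instead.

```python
import re

# Identifier (optionally schema-qualified) following FROM or JOIN.
_SOURCE = re.compile(r"\b(?:from|join)\s+([a-zA-Z_][\w.]*)", re.IGNORECASE)
# Identifier written by INSERT INTO or CREATE [OR REPLACE] TABLE/VIEW.
_TARGET = re.compile(
    r"\b(?:insert\s+into|create\s+(?:or\s+replace\s+)?(?:table|view))\s+([a-zA-Z_][\w.]*)",
    re.IGNORECASE,
)


def extract_dependencies(sql):
    """Return (target table or None, sorted list of source tables) for one statement."""
    target_match = _TARGET.search(sql)
    target = target_match.group(1).lower() if target_match else None
    sources = {name.lower() for name in _SOURCE.findall(sql)}
    sources.discard(target)  # a statement should not depend on its own output
    return target, sorted(sources)


if __name__ == "__main__":
    sql = """
    CREATE OR REPLACE VIEW marketing.segments AS
    SELECT c.id, d.age_band
    FROM crm.customers c
    JOIN crm.demographics d ON d.customer_id = c.id
    """
    print(extract_dependencies(sql))
    # ('marketing.segments', ['crm.customers', 'crm.demographics'])
```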

Real-Time Monitoring and Alerting

Effective tools should continuously monitor data pipelines and dependencies, providing immediate alerts when anomalies or failures occur. This proactive approach enables data teams to respond quickly to issues before they propagate throughout the system. Advanced monitoring solutions incorporate machine learning algorithms to establish baseline patterns and detect subtle deviations that might indicate emerging problems.
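Baseline-driven alerting does not have to start with machine learning. Assuming you record a daily row count per table, the Python sketch below flags a new value that lies more than three standard deviations from the historical mean. It is a simple statistical stand-in for learned baselines, and the threshold and counts are illustrative.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Flag `latest` if it is more than `threshold` standard deviations from the mean of `history`."""
    if len(history) < 2:
        return False  # too little data to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # perfectly stable history: any change is a deviation
    return abs(latest - mu) / sigma > threshold


if __name__ == "__main__":
    daily_row_counts = [10_000, 10_250, 9_900, 10_100, 10_050]  # illustrative values
    print(is_anomalous(daily_row_counts, 4_000))   # True: likely a partial load
    print(is_anomalous(daily_row_counts, 10_150))  # False: within normal variation
```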

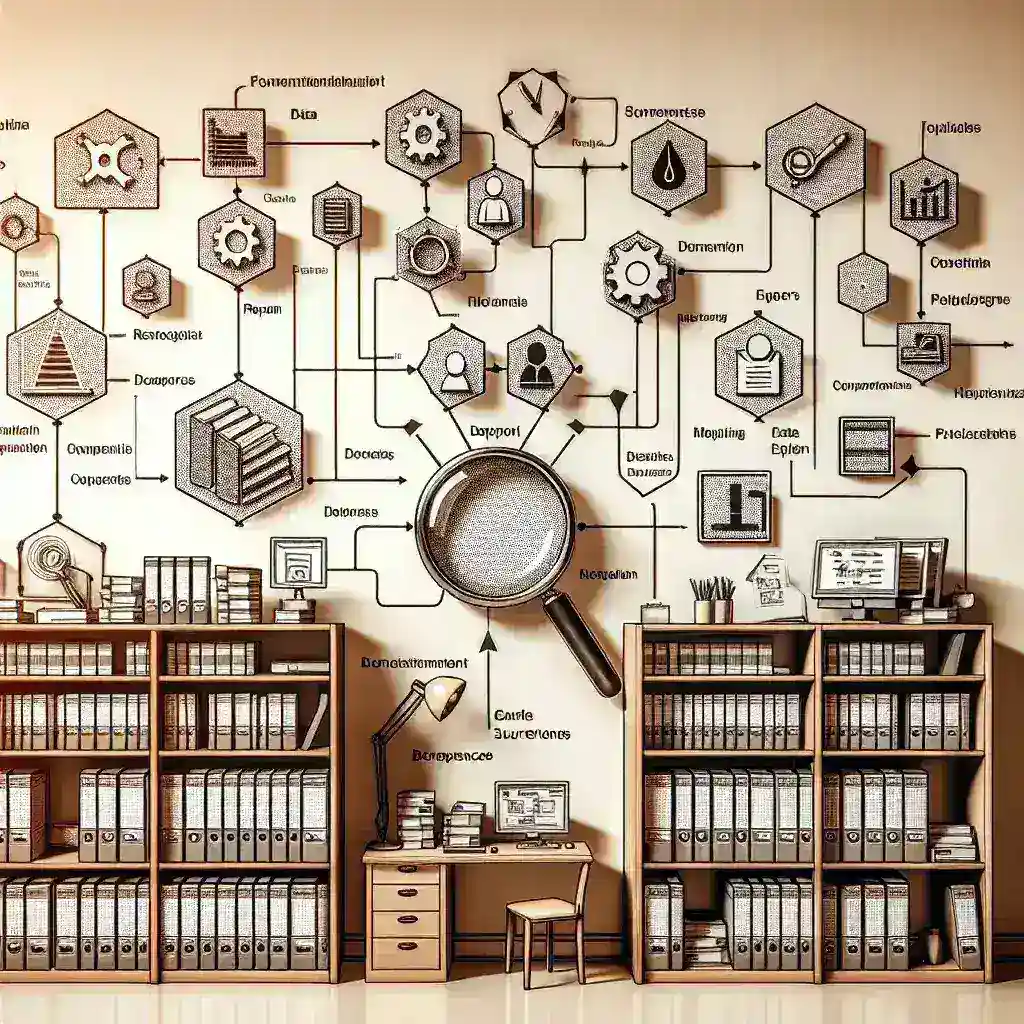

Visual Data Lineage Mapping

Intuitive visualization allows stakeholders to understand complex data relationships without deep technical expertise. Interactive lineage diagrams should display both backward (upstream) and forward (downstream) dependencies, so users can trace data from its source through every transformation step to its final consumption points.
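Forward and backward lineage are both graph traversals. The Python sketch below stores dependencies as a directed graph, where an edge from A to B means B is derived from A, and uses breadth-first search to answer both questions. The asset names are hypothetical.

```python
from collections import defaultdict, deque


class LineageGraph:
    """Directed graph of data assets; an edge source -> target means target is built from source."""

    def __init__(self):
        self._down = defaultdict(set)  # asset -> direct consumers
        self._up = defaultdict(set)    # asset -> direct sources

    def add_edge(self, source, target):
        self._down[source].add(target)
        self._up[target].add(source)

    @staticmethod
    def _walk(start, edges):
        """Breadth-first search returning every node reachable from `start`."""
        seen, queue = set(), deque([start])
        while queue:
            node = queue.popleft()
            for nxt in edges.get(node, ()):
                if nxt not in seen:
                    seen.add(nxt)
                    queue.append(nxt)
        return seen

    def downstream(self, asset):
        """Forward lineage: everything that transitively consumes `asset`."""
        return self._walk(asset, self._down)

    def upstream(self, asset):
        """Backward lineage: everything `asset` transitively depends on."""
        return self._walk(asset, self._up)


if __name__ == "__main__":
    g = LineageGraph()
    g.add_edge("crm.raw_customers", "staging.customers")
    g.add_edge("staging.customers", "marketing.segments")
    g.add_edge("crm.demographics", "marketing.segments")
    g.add_edge("marketing.segments", "dashboard.campaign_kpis")
    print(sorted(g.downstream("staging.customers")))
    # ['dashboard.campaign_kpis', 'marketing.segments']
    print(sorted(g.upstream("marketing.segments")))
    # ['crm.demographics', 'crm.raw_customers', 'staging.customers']
```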

Impact Analysis and Change Management

Before changing data structures or processes, organizations need to understand the downstream consequences. Dependency monitoring tools with impact analysis features identify every system, report, and application that a proposed change would affect.
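Impact analysis amounts to a downstream traversal followed by an ordering step. This Python sketch assumes the dependency graph is available as a dictionary from each asset to its direct consumers, plus a hypothetical asset-type lookup. It returns every affected asset in topological order, so upstream tables appear before the reports and applications built on them. It uses the standard library's graphlib (Python 3.9+), which raises graphlib.CycleError if the affected subgraph contains a cycle.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def impact_analysis(edges, kinds, changed):
    """List assets affected by a change to `changed`, upstream ones first.

    edges: dict mapping each asset to the set of assets that consume it directly
    kinds: dict mapping asset -> label such as "table", "report", "application"
    """
    # 1. Collect every asset that transitively depends on the changed one.
    affected, stack = set(), [changed]
    while stack:
        for consumer in edges.get(stack.pop(), ()):
            if consumer not in affected:
                affected.add(consumer)
                stack.append(consumer)

    # 2. Order them. TopologicalSorter expects a mapping of node -> predecessors.
    predecessors = {node: set() for node in affected}
    for source, consumers in edges.items():
        for target in consumers:
            if source in affected and target in affected:
                predecessors[target].add(source)
    ordered = TopologicalSorter(predecessors).static_order()
    return [(node, kinds.get(node, "unknown")) for node in ordered]


if __name__ == "__main__":
    edges = {
        "crm.demographics": {"marketing.segments"},
        "marketing.segments": {"dashboard.campaign_kpis", "app.email_targeting"},
    }
    kinds = {
        "marketing.segments": "table",
        "dashboard.campaign_kpis": "report",
        "app.email_targeting": "application",
    }
    for asset, kind in impact_analysis(edges, kinds, "crm.demographics"):
        print(f"{kind:<12} {asset}")
```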

Leading Tools and Technologies for Data Dependency Monitoring

The market offers numerous solutions for monitoring internal data dependencies, each with unique strengths and capabilities suited to different organizational needs and technical environments.

Enterprise-Grade Solutions

Apache Atlas is a widely used open-source metadata management and data governance framework with built-in lineage tracking. Initially developed by Hortonworks and now an Apache Software Foundation project, Atlas captures metadata from Hadoop ecosystem components such as Hive, HBase, Sqoop, Storm, and Kafka through dedicated hooks; Spark lineage is typically captured through a separate connector.
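For teams evaluating Atlas, lineage can also be read programmatically. The Python sketch below targets the Atlas v2 REST lineage endpoint (GET /api/atlas/v2/lineage/{guid} with `direction` and `depth` query parameters). It converts the response's `relations` into edges named by each entity's `qualifiedName`. The host, credentials, and Authorization header format are assumptions, and the field names should be checked against the REST documentation for your Atlas version. Atlas lineage usually includes process entities between datasets, so edges typically run dataset to process to dataset.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def lineage_url(base_url, guid, direction="BOTH", depth=3):
    """Build an Atlas v2 lineage URL; direction is INPUT, OUTPUT or BOTH."""
    query = urlencode({"direction": direction, "depth": depth})
    return f"{base_url.rstrip('/')}/api/atlas/v2/lineage/{guid}?{query}"


def fetch_lineage(base_url, guid, auth_header, **params):
    """GET the lineage JSON; the Authorization header format depends on your Atlas setup (assumption)."""
    request = Request(
        lineage_url(base_url, guid, **params),
        headers={"Authorization": auth_header, "Accept": "application/json"},
    )
    with urlopen(request) as response:
        return json.load(response)


def edges_from_lineage(payload):
    """Turn the response's relations into (from, to) pairs named by qualifiedName."""
    entities = payload.get("guidEntityMap", {})

    def name(guid):
        # Fall back to the raw GUID if the entity or attribute is missing.
        return entities.get(guid, {}).get("attributes", {}).get("qualifiedName", guid)

    return [(name(r["fromEntityId"]), name(r["toEntityId"])) for r in payload.get("relations", [])]
```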

For organizations seeking commercial solutions, Collibra provides an enterprise data governance platform with advanced lineage tracking capabilities. The platform combines automated discovery with collaborative features that enable business and technical users to contribute to metadata management efforts.

Cloud-Native Monitoring Solutions

Cloud service providers have developed catalog tools tailored to their own ecosystems. AWS Glue Data Catalog stores metadata about data assets within Amazon Web Services environments and provides a foundation for tracking their dependencies, while Google Cloud Data Catalog offers similar functionality for Google Cloud Platform users.

These cloud-native solutions benefit from tight integration with other platform services, enabling comprehensive monitoring across diverse data processing and storage services. However, organizations with multi-cloud or hybrid environments may require additional tools to achieve complete visibility.

Specialized Lineage and Monitoring Platforms

DataHub, originally developed by LinkedIn and now maintained as an open-source project, provides a modern approach to data discovery and lineage tracking. The platform emphasizes developer-friendly APIs and supports real-time metadata updates, making it particularly suitable for organizations with rapidly evolving data architectures.

Informatica Enterprise Data Catalog leverages artificial intelligence to automate the discovery and classification of data assets while providing comprehensive lineage tracking capabilities. The platform’s machine learning algorithms can identify relationships and dependencies that might be missed by traditional rule-based approaches.

Implementation Strategies and Best Practices

Successfully implementing data dependency monitoring requires careful planning and consideration of organizational factors beyond technology selection. Stakeholder alignment represents a critical success factor, as effective monitoring requires collaboration between data engineers, business analysts, and domain experts.

Phased Rollout Approach

Organizations should consider implementing dependency monitoring in phases, starting with the most critical data assets and gradually expanding coverage. This approach allows teams to develop expertise with the chosen tools while demonstrating value to stakeholders through early wins.
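If a dependency graph is already available, one way to choose the first assets is to rank them by how many other assets transitively depend on them. The Python sketch below does this. Downstream reach is only a proxy for criticality because it ignores business importance, and the asset names are hypothetical.

```python
def rank_by_downstream_reach(edges):
    """Return (asset, number of transitive dependents) pairs, most depended-on first.

    edges: dict mapping each asset to the set of assets that consume it directly
    """
    def reach(start):
        seen, stack = set(), [start]
        while stack:
            for consumer in edges.get(stack.pop(), ()):
                if consumer not in seen:
                    seen.add(consumer)
                    stack.append(consumer)
        seen.discard(start)  # ignore self-reference in cyclic graphs
        return len(seen)

    nodes = set(edges) | {c for consumers in edges.values() for c in consumers}
    # Sort by reach (descending), then by name for a stable, readable order.
    return sorted(((node, reach(node)) for node in nodes), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    edges = {
        "crm.raw_customers": {"staging.customers"},
        "staging.customers": {"marketing.segments", "finance.revenue"},
        "marketing.segments": {"dashboard.campaign_kpis"},
    }
    for asset, reach in rank_by_downstream_reach(edges):
        print(reach, asset)
```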

Initial phases typically focus on core business intelligence and reporting systems where data quality issues have the most immediate impact. As teams gain confidence and experience, monitoring can be extended to cover operational systems, data lakes, and experimental analytics environments.

Integration with Existing Workflows

Effective monitoring tools should integrate seamlessly with existing development and deployment workflows. This includes integration with version control systems, continuous integration pipelines, and change management processes. When dependency monitoring becomes a natural part of standard workflows, teams are more likely to maintain accurate metadata and respond appropriately to alerts.
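As an example of wiring dependency checks into continuous integration, the Python sketch below compares a table's proposed columns against the columns each downstream consumer reads. If any consumer would break, it exits with a non-zero status, which fails the CI job. The consumer-to-column mapping is assumed to come from your lineage tool (column-level lineage), and all names are hypothetical.

```python
import sys


def breaking_changes(proposed_columns, consumers):
    """Return {consumer: sorted missing columns} for consumers that would lose columns they read.

    proposed_columns: set of column names the changed table will have after the change
    consumers: dict mapping downstream asset -> set of columns it reads from that table
    """
    report = {}
    for consumer, required in consumers.items():
        missing = required - proposed_columns
        if missing:
            report[consumer] = sorted(missing)
    return report


def main():
    # Hypothetical inputs; in CI these would come from the lineage tool and the proposed change.
    proposed = {"customer_id", "age_band", "region"}
    consumers = {
        "marketing.segments": {"customer_id", "age_band"},
        "finance.churn_model": {"customer_id", "income_band"},
    }
    problems = breaking_changes(proposed, consumers)
    for consumer, cols in problems.items():
        print(f"{consumer} still reads removed columns: {', '.join(cols)}")
    return 1 if problems else 0  # non-zero exit fails the CI job


if __name__ == "__main__":
    sys.exit(main())
```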

Measuring Success and Continuous Improvement

Organizations must establish clear metrics to evaluate the effectiveness of their data dependency monitoring initiatives. Mean time to detection (MTTD) and mean time to resolution (MTTR) for data quality issues provide quantitative measures of improvement in operational efficiency.
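Mean time to detection and mean time to resolution are straightforward to compute from incident records. The Python sketch below assumes each incident records when the issue started, when it was detected, and when it was resolved. It measures resolution time from detection, although some teams measure it from the start of the incident instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    started: datetime   # when the data issue actually began (often reconstructed afterwards)
    detected: datetime  # when monitoring or a person flagged it
    resolved: datetime  # when correct data was restored


def _mean(deltas):
    if not deltas:
        raise ValueError("no incidents to average")
    return sum(deltas, timedelta()) / len(deltas)


def mean_time_to_detection(incidents):
    return _mean([i.detected - i.started for i in incidents])


def mean_time_to_resolution(incidents):
    # Measured from detection to resolution in this sketch.
    return _mean([i.resolved - i.detected for i in incidents])


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    incidents = [
        Incident(t0, t0 + timedelta(hours=2), t0 + timedelta(hours=5)),
        Incident(t0, t0 + timedelta(hours=4), t0 + timedelta(hours=5)),
    ]
    print(mean_time_to_detection(incidents))   # 3:00:00
    print(mean_time_to_resolution(incidents))  # 2:00:00
```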

Beyond operational metrics, organizations should track business impact indicators such as the accuracy of business intelligence reports, compliance audit results, and stakeholder confidence in data-driven decision making. Regular assessment of these metrics enables continuous refinement of monitoring strategies and tool configurations.

Building a Culture of Data Observability

Technology alone cannot solve data dependency challenges; organizations must cultivate a culture where data observability is valued and practiced consistently. This involves training programs for technical teams, clear governance policies, and recognition systems that reward proactive monitoring and metadata management.

Regular training sessions should cover both technical aspects of using monitoring tools and business concepts related to data quality and governance. When team members understand how their work contributes to broader organizational objectives, they are more likely to embrace monitoring practices as essential rather than burdensome.

Future Trends and Emerging Technologies

The field of data dependency monitoring continues to evolve rapidly, driven by advances in artificial intelligence, machine learning, and distributed computing technologies. Automated remediation represents an emerging trend where monitoring systems not only detect issues but also implement corrective actions without human intervention.
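The simplest form of automated remediation in use today is to retry a failed step and escalate to a person only when retries are exhausted. The Python sketch below shows that pattern with exponential backoff. The task, delays, and escalation hook are placeholders, and real remediation may also involve backfilling or quarantining bad data.

```python
import time


def run_with_remediation(task, max_attempts=3, base_delay=1.0, escalate=print, sleep=time.sleep):
    """Run `task`; on failure retry with exponential backoff, then escalate and re-raise."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception as exc:  # in practice, catch only errors known to be transient
            if attempt == max_attempts:
                escalate(f"task failed after {attempt} attempts: {exc}")
                raise
            # Wait 1x, 2x, 4x ... base_delay before the next attempt.
            sleep(base_delay * 2 ** (attempt - 1))
```

Passing `sleep` and `escalate` as parameters keeps the sketch testable and lets a team plug in its own paging or ticketing hook.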

Machine learning algorithms are becoming increasingly sophisticated at predicting potential dependency failures before they occur, enabling truly proactive rather than reactive monitoring approaches. These predictive capabilities will become particularly valuable as organizations continue to increase their reliance on real-time data processing and automated decision-making systems.

Integration with DataOps and MLOps Practices

The growing adoption of DataOps and MLOps methodologies is driving demand for monitoring tools that integrate seamlessly with automated deployment and testing workflows. Future solutions will likely provide deeper integration with continuous integration and continuous deployment (CI/CD) pipelines, enabling automated testing of data dependencies as part of standard development processes.

Organizations that invest in comprehensive data dependency monitoring today will be better positioned to take advantage of these emerging capabilities as they become available. The foundation of accurate metadata and established monitoring practices provides the necessary groundwork for more advanced automation and predictive analytics features.

Conclusion: Building Resilient Data Ecosystems

Effective monitoring of internal data dependencies represents a critical capability for organizations seeking to maintain reliable, high-quality data ecosystems. The tools and practices discussed in this guide provide a foundation for achieving comprehensive visibility into complex data relationships while enabling proactive management of data quality and system reliability.

Success in this area requires more than selecting the right technology; it demands a holistic approach that encompasses people, processes, and technology working in harmony. Organizations that invest in building strong data observability capabilities will find themselves better equipped to navigate the challenges of an increasingly data-driven business environment while capitalizing on opportunities for innovation and growth.

As data volumes continue to grow and systems become more complex, the importance of sophisticated dependency monitoring will only increase. Organizations that establish robust monitoring practices today will have a significant competitive advantage in leveraging their data assets for strategic decision-making and operational excellence.
